Robert M. FRENCH (Research Director, CNRS)

L.E.A.D. - C.N.R.S. UMR 5022
Pôle AAFE
Esplanade Erasme
BP 26513
21065 DIJON CEDEX

Tel.: +33 (0)3 80 39 90 65
Adm. Asst. Tel.: +33 (0)3 80 39 39 65 / 57 81
Fax: +33 (0)3 80 39 57 67
Email: robert dot french round-at-sign u-bourgogne dot fr no-spaces

Areas of research: Connectionist modeling, catastrophic interference, categorization in children and infants, bilingualism, foundations of cognitive science (esp. the Turing Test), analogy-making, computational models of evolution.


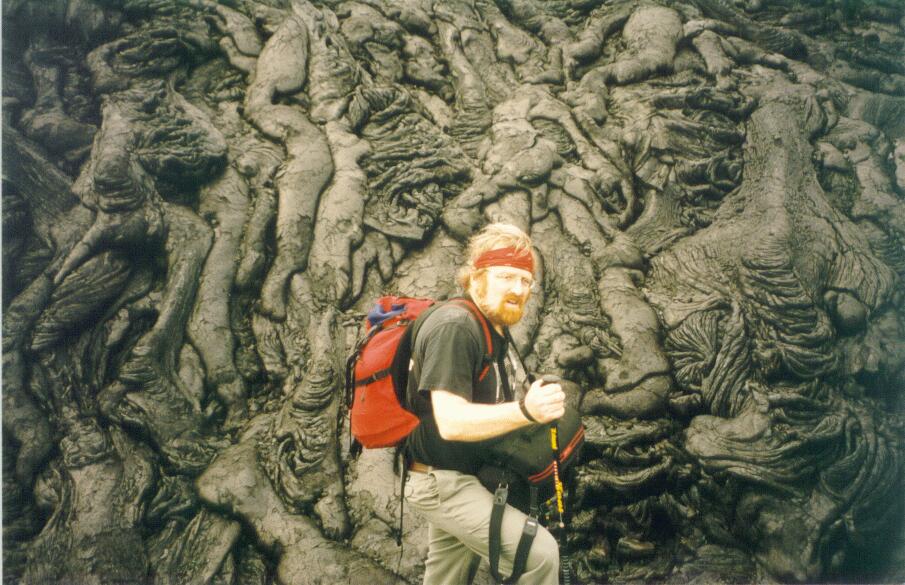

On the West Coast of Ireland
In a zone of active lava flows on The Big Island, Hawaii

Grants:
From Associations To Rules In The Development Of Concepts (FAR) (European Commission Sixth Framework: NEST no. 516542)
Humans - The Analogy-Making Species (European Commission Sixth Framework: NEST-2004-Path-HUM)
The Limits of Co-occurrence Analyses (Région de Bourgogne)
Basic Mechanisms of Learning and Forgetting in Natural and Artificial Systems (European Commission 5th Framework project HPRN-CT-1999-00065)

Workshops Organized:
April 12-14, 2007: The Tenth Neural Computation and Psychology Workshop (NCPW10) was held in Dijon, France, and was organized by Bob French and Xanthi Skoura-Papaxanthis. Proceedings of this Workshop: French, R. & Thomas, E. (eds.) (2008). FROM ASSOCIATIONS TO RULES: Connectionist Models of Behavior and Cognition. Singapore: World Scientific.

September 16-18, 2000: The Sixth Neural Computation and Psychology Workshop (NCPW6) was held in Liège, Belgium. Proceedings of this Workshop: French, R. and Sougné, J. (eds.) (2001). Connectionist models of learning, development and evolution. Berlin: Springer-Verlag.

March 28, 1998: IUAP Workshop "The role of implicit memory and implicit learning in representing the world", Château de Colonster, University of Liège, Liège, Belgium. This Workshop eventually led to a book entitled Implicit Learning and Consciousness: An empirical, philosophical, and computational consensus in the making? This book will be published by Psychology Press and will appear in the fall of 2001. Introduction: "The study of consciousness spans a host of disciplines ranging from philosophy to neuroscience, from psychology to computer modeling. Arguments about consciousness run the gamut from tenuous, even ridiculous, thought experiments to the most rigorous neuroscientific experiments. This book offers a novel perspective on many fundamental issues about consciousness based on empirical, computational and philosophical research on implicit learning - learning without conscious awareness of having learned..."

Books:

Recent papers: All papers are in PDF format and require Acrobat Reader (available free of charge at http://www.adobe.com/prodindex/acrobat/readstep.html). Please be aware that all papers made available electronically are PRE-PRINTS and may differ from their final, published versions, which, for reasons of copyright ownership, cannot be put on this site. If the paper has appeared in print, the citation references are given. Downloading a paper constitutes an explicit request for a pre-print of that paper. For the final, published version of a paper, please refer to the references included in the pre-print and then acquire the paper through the journal in which it appeared.

Index of papers available on this site:
Categorization in Infants and Children
Catastrophic interference in connectionist networks
Neural Network modeling (general)
Analogy-making

From Associations To Rules In The Development Of Concepts (FAR) (European Commission Sixth Framework: NEST no. 516542)
Denis Mareschal, Project Coordinator; Robert French, Co-Coordinator. Total Amount of the Grant: 1.2 M euros. Duration: 3 years.
Human adults appear to differ from other animals in their ability to use language to communicate, their use of logic and mathematics to reason, and their ability to abstract relations that go beyond perceptual similarity. These aspects of human cognition have one important thing in common: they are all thought to be based on rules. This apparent uniqueness of human adult cognition leads to an immediate puzzle: WHEN and HOW does this rule-based system come into being? Perhaps there is, in fact, continuity between the cognitive processes of non-linguistic species and pre-linguistic children on the one hand, and human adults on the other. Perhaps this transition is simply a mirage that arises from the fact that Language and Formal Reasoning are usually described by reference to systems based on rules (e.g., grammar or syllogisms).

Humans - The Analogy-Making Species (European Commission Sixth Framework: NEST-2004-Path-HUM)
Boicho Kokinov, Project Coordinator; Robert French, Co-Coordinator. Total Amount of the Grant: 1.8 M euros. Duration: 3 years.
The ability to make analogies lies at the heart of human cognition and is a fundamental mechanism that enables humans to engage in complex mental processes such as thinking, categorization, and learning and, in general, to understand the world and act effectively on it on the basis of past experience. This project focuses on understanding these uniquely human mechanisms of analogy-making and on exploring their evolution and development. A highly experienced, interdisciplinary, and international team will study and compare the performance of primates, infants, young children, and healthy adults, as well as children and adults with abnormal brain functioning. An interdisciplinary methodology will be used to pursue this goal, one that includes computational modeling, psychological experimentation, comparative studies, developmental studies, and brain imaging.

The Limits of Co-occurrence Analyses (Région de Bourgogne)
Robert French & Valerie Camos, Project Co-coordinators. Total Amount of the Grant: 139,500 euros. Duration: 1 year.
The overall objective of this project is to study new applications for, as well as the limits of, text co-occurrence programs such as LSA (Landauer and Dumais, 1997), HAL (Lund & Burgess, 1996), and PMI-IR (Turney, 2001). French & Labiouse (2002) pointed out four problems with word co-occurrence programs: i) the difficulties caused by the intrinsic deformability of semantic space; ii) the current inability of these programs to detect co-occurrences of abstract/relational structure, especially distal relational structure; iii) their lack of essential world knowledge (e.g., fathers are always men, mothers always women), which humans acquire through learning or direct experience with the world; and, finally, iv) their assumption of the atomic nature of words. We hope to use techniques drawn from statistics, linguistics, and analogy-making to explore these four problems and to determine to what extent they can (or cannot) be handled by current co-occurrence programs. In addition, within these limits, we hope to examine new possibilities for the use of these types of programs.

Basic Mechanisms of Learning and Forgetting in Natural and Artificial Systems (European Commission 5th Framework project HPRN-CT-1999-00065)
Robert M. French, Project Director. Total Amount of the Grant: 980,000 euros. Duration: 4 years + 1 year extension. Completed: 2005.
This five-year project received a 980,000 euro grant from the European Commission to carry out a highly multidisciplinary theoretical, empirical, and computational study of the basic mechanisms of learning and forgetting.
Participating members of the project were from the University of Warwick (Nick Chater) and Birkbeck College (Denis Mareschal) in England, the Université Libre de Bruxelles (Axel Cleeremans) and the Université de Liège (Bob French) in Belgium, and the Université de Grenoble (Bernard Ans/Stephane Rousset) in France. The areas of inquiry ranged from theoretical mathematics at Warwick to fMRI studies at Grenoble, and from implicit learning at Brussels to infant cognitive development at Birkbeck. The primary goal of the project was to train young European researchers in a variety of cognitive science research skills. Six Ph.D.s were awarded. Two post-docs in the project were awarded permanent faculty positions upon conclusion of their work in the project. In addition, approximately 80 publications and 4 books were produced by members of the project.

French, R. M. (2009). The Red Tooth Hypothesis: A computational model of predator-prey relations, protean escape behavior and sexual reproduction. Journal of Theoretical Biology. (in press)
This paper presents an extension of the Red Queen Hypothesis (hereafter, RQH) that we call the Red Tooth Hypothesis (RTH). This hypothesis suggests that predator-prey relations may play a role in the maintenance of sexual reproduction in many higher animals. RTH is based on an interaction between learning on the part of predators and evolution on the part of prey. We present a simple predator-prey computer simulation that illustrates the effects of this interaction. This simulation suggests that the optimal escape strategy from the prey's standpoint would be to have a small number of highly reflexive, largely innate (and, therefore, very fast) escape patterns that would also be unlearnable by the predator. One way to achieve this would be for each individual in the prey population to have a small set of hard-wired escape patterns that differed from one individual to the next.
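To make the learnability argument concrete, here is a deliberately minimal Python toy, not the simulation from the paper. It assumes a predator that simply predicts the escape pattern it has seen most often and succeeds only when the next prey uses that pattern; the function name `predator_success_rate` and the pattern labels are hypothetical.

```python
import random
from collections import Counter


def predator_success_rate(prey_patterns, n_trials=10_000, seed=0):
    """Toy model (an assumption, not the paper's simulation): the predator
    predicts the escape pattern it has observed most often, and a catch
    occurs only when the prey it meets uses exactly that pattern."""
    rng = random.Random(seed)
    observed = Counter()
    catches = 0
    for _ in range(n_trials):
        # Each encounter is with a prey individual carrying one hard-wired pattern.
        pattern = rng.choice(prey_patterns)
        prediction = observed.most_common(1)[0][0] if observed else None
        if prediction == pattern:
            catches += 1
        # The predator learns from every encounter, successful or not.
        observed[pattern] += 1
    return catches / n_trials


# Monomorphic prey: every individual escapes the same way.
print(predator_success_rate(["left"]))
# Polymorphic prey: eight equally common individual escape patterns.
print(predator_success_rate(list(range(8))))
```

Under these assumptions the first call returns nearly 1.0, because a single shared pattern is learned after one encounter, while the second stays near 1/8, because no single learned prediction fits most individuals.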
We argue that polymorphic escape patterns at the population level could be produced via sexual reproduction at little or no evolutionary cost and would be as efficient as, or potentially more efficient than, individual-level protean (i.e., random) escape behavior. We further argue that, especially under high predation pressure, sexual recombination would be a more rapid, and therefore more effective, means of producing highly variable escape behaviors at the population level than asexual reproduction.

Nair, S. S., French, R. M., Laroche, D., Ornetti, P., and Thomas, E. (2009). The Application of Machine Learning Algorithms to the Analysis of Electromyographic Patterns from Arthritic Patients. IEEE Transactions on Neural Systems and Rehabilitation Engineering. (in press)
The main aim of our study was to investigate the possibility of applying machine learning techniques to the analysis of electromyographic (EMG) patterns collected from arthritic patients during gait. The EMG recordings were collected from the lower limbs of patients with arthritis and compared with those of healthy subjects (CO) with no musculoskeletal disorder. The study involved subjects suffering from two forms of arthritis, viz., rheumatoid arthritis (RA) and hip osteoarthritis (OA). The analysis of the data was plagued by two problems which frequently render the analysis of this type of data extremely difficult. One was the small number of human subjects that could be included in the investigation, given the inclusion and exclusion criteria specified for the study. The other was the high intra- and inter-subject variability present in EMG data. We identified some of the muscles differently employed by the arthritic patients by using machine learning techniques to classify the two groups and then identifying the muscles that were critical for the classification.
For the classification, we employed least-squares kernel (LSK) algorithms and neural network algorithms such as the Kohonen self-organizing map, learning vector quantization, and the multilayer perceptron. Finally, we also tested the more classical technique of linear discriminant analysis (LDA). The performance of the different algorithms was compared. The LSK algorithm showed the highest capacity for classification. Our study demonstrates that the newly developed LSK algorithm is well suited to the treatment of biological data. The muscles that were most important for distinguishing the RA from the CO subjects were the soleus and biceps femoris. For separating the OA and CO subjects, however, it was the gluteus medialis muscle. Our study demonstrates how classification with EMG data can be used in the clinical setting. While such procedures are unnecessary for diagnosing the type of arthritis present, an understanding of the muscles which are responsible for the classification can help to better identify targets for rehabilitative measures.

French, R. M. and Perruchet, P. (2009). Generating constrained randomized sequences: Item frequency matters. Behavior Research Methods. (in press)
All experimental psychologists understand the importance of randomizing lists of items. However, randomization is generally constrained, and these constraints (in particular, not allowing immediately repeated items), which are designed to eliminate particular biases, frequently engender others. We describe a simple Monte Carlo randomization technique that solves a number of these problems. However, in many experimental settings, we are concerned not only with the number and distribution of items, but also with the number and distribution of transitions between items. The above algorithm provides no control over this. We therefore introduce a simple technique using transition tables for generating correctly randomized sequences.
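The abstract does not spell out the Monte Carlo procedure, so the Python sketch below uses one simple stand-in, assumed for illustration: rejection sampling, i.e., reshuffling a pool with fixed item frequencies until no item immediately repeats. It then tabulates item-pair transition counts and transitional probabilities, the quantities the transition-table technique is meant to control. All function names are hypothetical, and this is not the authors' Excel tool.

```python
import random
from collections import Counter


def no_repeat_shuffle(counts, rng, max_tries=100_000):
    """Rejection sampling (an assumed stand-in for the paper's Monte Carlo method):
    shuffle a pool holding each item counts[item] times until no item
    immediately follows itself."""
    pool = [item for item, n in counts.items() for _ in range(n)]
    for _ in range(max_tries):
        rng.shuffle(pool)
        if all(a != b for a, b in zip(pool, pool[1:])):
            return list(pool)
    raise RuntimeError("no valid sequence found; the constraints may be too tight")


def transition_counts(seq):
    """Count each ordered item pair (a, b) where b immediately follows a."""
    return Counter(zip(seq, seq[1:]))


def transitional_probabilities(seq):
    """Estimate P(next = b | current = a) from the observed transition counts."""
    pairs = transition_counts(seq)
    from_totals = Counter(a for a, _ in zip(seq, seq[1:]))
    return {(a, b): n / from_totals[a] for (a, b), n in pairs.items()}


rng = random.Random(1)
seq = no_repeat_shuffle({"A": 5, "B": 5, "C": 5, "D": 5}, rng)
print("".join(seq))
print(transition_counts(seq))
```

Rejection sampling keeps item frequencies exact but gives no direct control over how often each transition occurs, which is the gap the abstract points to. By symmetry, when all items are equally frequent, every allowed transition a→b is equally likely on average; with unequal frequencies that no longer holds.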
We present an analytic method of producing item-pair frequency tables and item-pair transitional probability tables when immediate repetitions are not allowed. We illustrate these difficulties, and how to overcome them, with reference to a classic paper on infant word segmentation. Finally, we make available an Excel file that allows users to generate transition tables with up to ten different item types, and to generate appropriately distributed randomized sequences of any length without immediately repeated elements. This file is freely available at: http://leadserv.u-bourgogne.fr/IMG/xls/TransitionMatrix.xls

French, R. M. (2009). If it walks like a duck and quacks like a duck... The Turing Test, Intelligence and Consciousness. In P. Wilken, T. Bayne, A. Cleeremans (eds.). Oxford Companion to Consciousness. Oxford, UK: Oxford Univ. Press. 641-643.
We could use the Turing Test as a way of providing a graded assessment, rather than an all-or-nothing decision, of the intelligence of machines. Thus, the further the machine's answers were from average human answers on a series of questions that appeal to subcognitive associations derived from our interaction with the world, the less intelligent it would be (see: Subcognition and the Limits of the Turing Test). In like manner, the Turing Test could potentially be adapted to provide a graded test for human consciousness. The Interrogator would draw up a list of subcognitive questions that explicitly dealt with subjective perceptions, like the question about holding Coca-Cola in one's mouth, about sensations, and about a wide range of other subjective perceptions. As before, the Interrogator would pose these questions to a large sample of randomly chosen people. Then, as for the graded Turing Test for intelligence, the divergence of the computer's answers from the average answers of the people in the random sample would constitute a "measure of human consciousness" with respect to our own consciousness. In short, the Turing Test, with an appropriately tailored set of questions, given first to a random sample of people, could be used to provide an operational means of assessing consciousness.

Thibaut, J.-P., French, R. M., Vezneva, M. (2009). Cognitive Load and Analogy-making in Children: Explaining an Unexpected Interaction. Proceedings of the Thirty-first Annual Cognitive Science Society Conference. 1048-1053.
The aim of the present study is to investigate the performance of children of different ages on an analogy-making task involving semantic analogies in which there are competing semantic matches. We suggest that this can best be studied in terms of developmental changes in executive functioning. We hypothesize that the selection of the common relational structure requires the inhibition of other salient features, such as semantically related matches. Our results show that children's performance in classic A:B::C:D analogy-making tasks seems to depend crucially on the nature of the distractors and on the association strength between the A and B terms, on the one hand, and the C and D terms, on the other. These results agree with an analogy-making account (Richland et al., 2006) based on varying limitations in executive functioning at different ages.

Thibaut, J.-P., French, R. M., Vezneva, M. (2008). Analogy-Making in Children: The Importance of Processing Constraints. Proceedings of the Thirtieth Annual Cognitive Science Society Conference. 475-480.
The aim of the present study is to investigate children's performance in an analogy-making task involving competing perceptual and relational matches in terms of developmental changes in executive functioning.
We hypothesize that the selection of the common relational structure requires the inhibition of more salient perceptual features (such as identical shapes or colors). Most of the results show that children's performance in analogy-making tasks seems to depend crucially on the nature of the distractors. In addition, our results show that analogy-making performance depends on the nature of the dimensions involved in the relations (shape or color). Finally, in simple conditions, performance was adversely affected by the presence of irrelevant dimensions. These results are compatible with an analogy-making account (Richland et al., 2006) based on varying limitations in executive functioning at different ages.

Gill, A. J., French, R. M., Gergle, D., Oberlander, J. (2008). Identifying Emotional Characteristics from Short Blog Texts. In Proceedings of the Thirtieth Annual Cognitive Science Conference, NJ: LEA. (accepted)
Emotion is at the core of understanding ourselves and others, and the automatic expression and detection of emotion could enhance our experience with technologies. In this paper, we explore the use of computational linguistic tools to derive emotional features. Using 50- and 200-word samples of naturally occurring blog texts, we find that some emotions are more discernible than others. In particular, automated content analysis shows that authors expressing anger use the most affective language and also negative affect words; authors expressing joy use the most positive emotion words. In addition, we explore the use of co-occurrence semantic space techniques to classify texts via their distance from emotional concept exemplar words: this demonstrated some success, particularly for identifying author expression of fear and joy. This extends previous work by using finer-grained emotional categories and alternative linguistic analysis techniques. We relate our findings to human emotion perception and note potential applications.

Gill, A. J., Gergle, D., French, R. M., and Oberlander, J. (2008). Emotion Rating from Short Blog Texts. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI 2008). 1121-1124. New York: ACM Press.
Being able to automatically perceive a variety of emotions from text alone has potentially important applications in CMC and HCI that range from identifying mood from online posts to enabling dynamically adaptive interfaces. However, such ability has not been proven in human raters or computational systems. Here we examine the ability of naive raters of emotion to detect one of eight emotional categories from 50- and 200-word samples of real blog text.
Using expert raters as a ‘gold standard’, naive-expert rater agreement increased with longer texts, and was high for ratings of joy, disgust, anger and anticipation, but low for acceptance and ‘neutral’ texts. We discuss these findings in light of theories of CMC and potential applications in HCI.

French, R. M. and Kus, E. (2008). KAMA: A Temperature-Driven Model of Mate Choice Using Dynamic Partner Representations. Adaptive Behavior, 16(1), 71-95.
KAMA is a model of mate choice based on a gradual, stochastic process of building up representations of potential partners through encounters and dating, ultimately leading to marriage. Individuals must attempt to find a suitable mate in a limited amount of time with only partial knowledge of the individuals in the pool of potential candidates. Individuals have multiple-valued character profiles, which describe a number of their characteristics (physical beauty, potential earning power, etc.), as well as preference profiles, which specify their degree of preference for those characteristics in members of the opposite sex. A process of encounters and dating allows individuals to gradually build up accurate representations of potential mates. Individuals each have a “temperature”, which is the extent to which they are willing to continue exploring mate-space and which drives individual decision-making. The individual-level mechanisms implemented in KAMA produce population-level data that qualitatively match empirical data. Perhaps most significantly, our results suggest that differences in first-marriage ages and hazard-rate curves for men and women in the West may, to a large extent, be due to the Western dating practice whereby men ask women out and women then accept or refuse their offer.

Delbé, C., French, R. M., Bigand, E. (2008). Catégorisation asymétrique de séquences de hauteurs musicales [Asymmetric categorization of musical pitch sequences]. Année Psychologique. (accepted, article in French)
An unusual visual category learning asymmetry in infants was observed by Quinn, Eimas, & Rosenkrantz (1993). A series of experiments and simulations seemed to show that this asymmetry was due to the perceptual inclusion of the cat category within the dog category because of the greater perceptual variability of the distributions of the visual features of dogs compared to cats (Mareschal & French, 1997; Mareschal, French, & Quinn, 2000; French, Mermillod, Quinn, & Mareschal, 2001; French, Mareschal, Mermillod, & Quinn, 2004). In the present paper, we explore whether this asymmetric categorization phenomenon generalizes to the auditory domain. We developed a series of sequential auditory stimuli analogous to the visual stimuli in Quinn et al. Two experiments on adult listeners using these stimuli seem to demonstrate the presence of an identical asymmetric categorization effect in the sequential auditory domain. Furthermore, connectionist simulations confirmed that purely bottom-up processes were largely responsible for our behavioural results.

Cowell, R. A. & French, R. M. (2007). An unsupervised, dual-network connectionist model of rule emergence in category learning. In S. Vosniadou, D. Kayser, & A. Protopapas (eds.) Proceedings of the 2007 European Cognitive Science Society Conference. NJ: LEA. 318-323.
We develop an unsupervised "dual-network" connectionist model of category learning in which rules gradually emerge from a standard Kohonen network. The architecture is based on the interaction of a statistical-learning (Kohonen) network and a competitive-learning rule network. The rules that emerge in the rule network are weightings of individual features according to their importance for categorization. Once the combined system has learned a particular rule, it de-emphasizes those features that are not sufficient for categorization, thus allowing correct classification of novel, but atypical, stimuli, for which a standard Kohonen network fails.
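The effect of rule weights on a Kohonen map can be illustrated with a small Python sketch. It is not the authors' dual-network model: the feature weights here are set by hand (in the model they are learned by the competitive rule network), and the map is a toy one-dimensional Kohonen map with two units. The function names and the example stimuli are hypothetical.

```python
import math


def weighted_sq_dist(unit, x, feature_weights):
    """Squared Euclidean distance with each feature scaled by a weight;
    a weight near 0 effectively removes ("de-emphasizes") that feature."""
    return sum(w * (u - v) ** 2 for u, v, w in zip(unit, x, feature_weights))


def best_matching_unit(units, x, feature_weights):
    """Index of the map unit closest to input x under the weighted distance."""
    return min(range(len(units)),
               key=lambda i: weighted_sq_dist(units[i], x, feature_weights))


def kohonen_step(units, x, feature_weights, lr=0.1, radius=1.0):
    """One standard Kohonen update on a 1-D map: move the winner, and its
    neighbours to a lesser degree, toward the input x (in place)."""
    winner = best_matching_unit(units, x, feature_weights)
    for i, unit in enumerate(units):
        h = math.exp(-((i - winner) ** 2) / (2 * radius ** 2))  # Gaussian neighbourhood
        for j in range(len(unit)):
            unit[j] += lr * h * (x[j] - unit[j])
    return winner


# Unit 0 ~ category A prototype, unit 1 ~ category B prototype.
# Feature 0 is diagnostic; feature 1 is merely typical of B.
prototypes = [[0.0, 0.0], [1.0, 1.0]]
atypical_a = [0.1, 1.0]  # an atypical A: correct on feature 0, B-like on feature 1

print(best_matching_unit(prototypes, atypical_a, [1.0, 1.0]))  # all features equal
print(best_matching_unit(prototypes, atypical_a, [1.0, 0.0]))  # "rule": only feature 0
```

With equal weights the atypical item lands on the B unit (squared distance 0.81 versus 1.01); with the hand-set rule weights it is matched to A (0.01 versus 0.81), which mirrors, in miniature, the kind of correction the abstract describes.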
We explain the principles and architectural details of the model and show how it works correctly for stimuli that are misclassified by a standard Kohonen network.

Gill, A. & French, R. M. (2007). Semantic distance and author personality perception through texts. In S. Vosniadou, D. Kayser, A. Protopapas (eds.) Proceedings of the 2007 European Cognitive Science Society Conference. NJ: LEA. 682-687.

French, R. M. (2008). Relational priming is to analogy-making as one-ball juggling is to seven-ball juggling. In Behavioral and Brain Sciences (in press)
Relational priming is argued to be a deeply inadequate model of analogy-making because of its intrinsic inability to do analogies where the base and target domains share no common attributes and the mapped relations are different. The authors rely on carefully handcrafted representations to allow their model to make a complex analogy, seemingly unaware of the debate on this issue 15 years ago. Finally, they incorrectly assume the existence of fixed, context-independent relations between objects.

French, R. M. (2007). The dynamics of the computational modeling of analogy-making.
In The CRC Handbook of Dynamic Systems Modeling. Paul Fishwick (ed.). Boca Raton, FL: CRC Press LLC, ch. 2, 1-18.
In this paper we will begin by introducing a notion of analogy-making that is considerably broader than the normal construal of this term. We will argue that analogy-making, thus defined, is one of the most fundamental and powerful capacities in our cognitive arsenal. We will claim that the standard separation of the representation-building and mapping phases cannot ultimately succeed as a strategy for modeling analogy-making. In short, the context-specific representations that we use in short-term memory, and that computers will someday use in their short-term memories, must arise from a continual, dynamic interaction between high-level knowledge-based processes and low-level, largely unconscious associative memory processes. We further suggest that this interactive process must be mediated by context-dependent computational temperature, a means by which the system dynamically monitors its own activity, ultimately allowing it to settle on the appropriate representations for a given context.

Abreu, A., French, R. M., Cowell, R. A. & de Schonen, S. (2006). Local-Global visual deficits in Williams Syndrome: Stimulus presence contributes to diminished performance on image-reproduction. Psychologica Belgica, 46(4), 269-281.
Impairments in visuospatial processing exhibited by individuals with Williams Syndrome (WS) have been ascribed to a local processing bias. The imprecise specification of this local bias hypothesis has led to contradictions between different accounts of the visuospatial deficits in WS. We present two experiments investigating visual processing of geometric Navon stimuli by children with WS.
The first experiment examined image reproduction in a visuoconstruction task, and the second explored the effect of manipulating global salience on recognition of visual stimuli by varying the density of local elements possessed by the stimuli. In the visuoconstruction task, the children with WS did show a local bias with respect to controls, but only when the target being copied was present; when drawing from memory, subjects with WS produced a heterogeneous pattern of answers. In the recognition task, children with WS exhibited the same sensitivity to global figures as matched controls, confirming previous findings in which no local bias in perception was found in WS subjects. We propose that subjects with WS are unable to disengage their attention from local elements during the planning stage of image reproduction (a visual-conflict hypothesis).

Delbé, C., Bigand, E., & French, R. M. (2006). Asymmetric Categorization in the Sequential Auditory Domain. In Proceedings of the 28th Annual Cognitive Science Society Conference. NJ: LEA. 1210-1215.
An unusual category learning asymmetry in infants was observed by Quinn et al. (1993). Infants who were initially exposed to a series of pictures of cats and then were shown a dog and a novel cat showed significantly more interest in the dog than in the cat. However, when the order of presentation was reversed (i.e., dogs were seen first, then a cat and a novel dog), the cat attracted no more attention than the novel dog. A series of experiments and simulations seemed to show that this asymmetry was due to the perceptual inclusion of the cat category within the dog category because of the greater perceptual variability of dogs compared to cats (Mareschal & French, 1997; Mareschal et al., 2000; French et al., 2001, 2004). In the present paper, we explore whether this asymmetric categorization phenomenon generalizes to the auditory domain. We developed a series of sequential auditory stimuli analogous to the visual stimuli in Quinn et al. Two experiments on adult listeners using these stimuli seem to demonstrate the presence of an identical asymmetric categorization effect in the sequential auditory domain. Furthermore, we simulated these results with a connectionist model of sequential learning.
Together with the behavioral data, we can conclude from this simulation that, as in the infant visual categorization experiments, purely bottom-up processes were largely responsible for our results. French, R. M., Kus, E.T., (2006). Modeling Mate-Choice using Computational Temperature and Dynamically Evolving Representations. In Proceedings of the 28th Annual Cognitive Science Society Conference, NJ:LEA. We present a model of mate-choice (KAMA) that is based on a gradual, stochastic process of representation-building leading to marriage. KAMA reproduces empirically verifiable population-level mate-selection behavior using individual-level mate-choice mechanisms. Individuals have character profiles, which describe a number of their characteristics (physical beauty, potential earning power, etc.), as well as preference profiles, that specify their degree of preference for those characteristics in members of the opposite sex. A process of encounters and dating serves to exchange information and allows accurate representations of potential mates to be gradually built up over time. Finally, individuals each have a "temperature", which is the extent to which they are willing to continue exploring mate-space. "Temperature" (the inverse of mate "choosiness") drives individual decision-making in this model. We show that the individual-level mechanisms implemented in the model prod! uce population-level data that qualitatively matches empirical data. French, R. M. (2006). The dynamics of the computational modeling of analogy-making. In this paper we will begin by introducing a notion of analogy-making that is considerably broader than the normal construal of this term. We will argue that analogy-making, thus defined, is one of the most fundamental and powerful capacities in our cognitive arsenal. We will claim that the standard separation of the representation-building and mapping phases cannot ultimately succeed as a strategy for modeling analogy-making. 
In short, the context-specific representations that we use in short-term memory (and that computers will someday use in their short-term memories) must arise from a continual, dynamic interaction between high-level, knowledge-based processes and low-level, largely unconscious associative memory processes. We further suggest that this interactive process must be mediated by context-dependent computational temperature, a means by which the system dynamically monitors its own activity, ultimately allowing it to settle on the appropriate representations for a given context.

Abreu, A. M., French, R. M., Annaz, D., Thomas, M., & De Schonen, S. (2005). A "Visual Conflict" Hypothesis for Global-Local Visual Deficits in Williams Syndrome: Simulations and Data. Individuals with Williams Syndrome (WS) demonstrate impairments in visuospatial cognition. This has been ascribed to a local processing bias. More specifically, it has been proposed that the deficit arises from a problem in disengaging attention from local features. We present preliminary data from an integrated empirical and computational exploration of this phenomenon. Using a connectionist model, we first clarify and formalize the proposal that visuospatial deficits arise from an inability to disengage from local features. We then introduce two empirical studies using Navon-style stimuli. The first explored sensitivity to local vs. global features in a perception task, evaluating the effect of a manipulation that raised the salience of global organization. Thirteen children with WS exhibited the same sensitivity to this manipulation as controls matched for chronological age, suggesting no local bias in perception. The second study focused on image reproduction and demonstrated that, in contrast to controls, the children with WS were distracted in their drawings by having the target in front of them rather than drawing from memory.
We discuss the results in terms of an inability to disengage during the planning stage of reproduction, due to over-focusing on local elements of the current visual stimulus.

French, R. M., Mareschal, D., Mermillod, M., & Quinn, P. C. (2004). The Role of Bottom-up Processing in Perceptual Categorization by 3- to 4-month-old Infants: Simulations and Data. Journal of Experimental Psychology: General. Disentangling bottom-up and top-down processing in adult category learning is notoriously difficult. Studying category learning in infancy provides a simple way of exploring category learning while minimizing the contribution of top-down information. Three- to four-month-old infants presented with cat or dog images will form a perceptual category representation for Cat that excludes dogs and for Dog that includes cats. We argue that an inclusion relationship in the distribution of features in the images explains the asymmetry. Using computational modeling and behavioral testing, we show that the asymmetry can be reversed or removed by using stimulus images that reverse or remove the inclusion relationship. The findings suggest that categorization of non-human animal images by young infants is essentially a bottom-up process.

French, R. M., & Jacquet, M. (2004). All cases of word production are not created equal: A reply to Costa & Santesteban. Trends in Cognitive Sciences. While we are not necessarily in disagreement with the comment by Costa and Santesteban, neither are we as convinced as they are of the need for two modalities, one for word production and the other for word recognition. Their key claim is that “in word production, it is the speaker who intentionally chooses the target language.” Perhaps at the moment of actually switching languages, one could argue for the need for a top-down, intentional switching mechanism.
But during most language production, simpler, automatic mechanisms of word activation (identical to those at work in word recognition) would suffice to keep the bilingual in one language or the other. Each word in a particular language, whether spoken or heard, activates a halo of other words, virtually all of which are in the same language; as a result, no particular intentional effort is required for a bilingual to remain in that language.

French, R. M. (2008). Review of Neuroconstructivism: How the brain constructs cognition by Mareschal et al. (2007). This book is an excellent manifesto for future work in child development. It presents a multidisciplinary approach that clearly demonstrates the value of integrating modeling, neuroscience, and behavior to explore the mechanisms underlying development and to show how internal, context-dependent representations arise and are modified during development. Its only major flaw is that it gives short shrift to the study of the role of genetics in development.

French, R. M. (2004). For historians of automated computing only: A review of Who Invented The Computer? The Legal Battle That Changed Computing History by Alice Rowe Burks. Endeavour. John Mauchly and J. Presper Eckert are widely thought to have invented the electronic computer as we know it today. Burks’s book describes in (sometimes excruciating) detail the 1971 court case that established that John V. Atanasoff got there first with the invention of a special-purpose electronic computing device, many of whose key features were included in Mauchly and Eckert’s ENIAC without credit being given to Atanasoff. For this reason, the Mauchly and Eckert patent was invalidated. Unfortunately, the book does not really deal with the question in its title, but rather gives a blow-by-blow analysis of the 1971 court case. While this book provides a thorough treatment of Honeywell vs. Sperry-Rand and of Atanasoff’s contribution to the modern computer, it is certainly not the place for a lay reader to find out who invented the computer.

French, R. M., & Jacquet, M. (2004). Understanding Bilingual Memory: Models and Data. Trends in Cognitive Sciences, 8(2), 87-93. The first attempts to put the study of bilingual memory on a sound scientific footing date only from the beginning of the 1950s, and only in the last two decades has the field really come into its own. Our focus is primarily, if not exclusively, on experimental and computational studies of bilingual memory. Over the last decade, and particularly over the last five years, bilingual memory research has developed the experimental, neuropsychological, and computational tools that allow researchers to begin to answer some of the field’s major outstanding questions, thus effectively bringing to an end the endless thrust and counterthrust on these questions that previously characterized the field. This article is organized along the lines of the conceptual division suggested by François Grosjean: language knowledge and organization, on the one hand, and the mechanisms that operate on that knowledge and organization, on the other. Various connectionist models of bilingual memory that attempt to incorporate both organizational and operational considerations will serve to bridge these two divisions.

Kokinov, B., & French, R. M. (2003). Computational Models of Analogy-making. In Nadel, L. (Ed.) Encyclopedia of Cognitive Science. Vol. 1, pp. 113-118. London: Nature Publishing Group. The field of computer modeling of analogy-making has moved from early models, intended mainly as existence proofs that computers could in fact be programmed to make analogies, to complex models that make nontrivial predictions of human behavior.
Researchers have come to appreciate the need for structural mapping of the base and target domains, for integration of and interaction between representation-building, retrieval, mapping, and learning, and for building systems that can potentially scale up to the real world.

Mermillod, M., French, R. M., Quinn, P., & Mareschal, D. (2003). The Importance of Long-term Memory in Infant Perceptual Categorization. Proc. of the 25th Annual Conference of the Cognitive Science Society. NJ: LEA, 804-809. Quinn and Eimas (1998) reported that young infants include non-human animals (i.e., cats, horses, and fish) in their category representation for humans. To account for this surprising result, it was proposed that infants' representation of humans functions as an attractor for non-human animals and is based on their previous experience with humans. We report three simulations that provide a computational basis for this proposal. These simulations show that a “dual-network” connectionist model that incorporates both bottom-up (i.e., short-term memory) and top-down (i.e., long-term memory) processing is sufficient to account for the empirical results obtained with the infants.

Van Rooy, D., Van Overwalle, F., Vanhoomissen, T., Labiouse, C., & French, R. M. (2003). A Recurrent Connectionist Model of Group Biases. Psychological Review, 110, 536-563. Major biases and stereotypes in group judgments are reviewed and modeled from a recurrent connectionist perspective. These biases are in the areas of group impression formation (illusory correlation), group differentiation (accentuation), stereotype change (dispersed versus concentrated distribution of inconsistent information), and group homogeneity. All these phenomena are illustrated with well-known experiments and simulated with an auto-associative network architecture that uses a linear activation update and the delta learning algorithm for adjusting the connection weights.
All the biases were successfully reproduced in the simulations. The discussion centers on how the particular simulation specifications compare to other models of group biases, and on how they may be used to develop novel hypotheses for testing the connectionist modeling approach and, more generally, for improving theorizing in the field of social biases and stereotype change.

French, R. M. (2003). Catastrophic Forgetting in Connectionist Networks. In Nadel, L. (Ed.) Encyclopedia of Cognitive Science. Vol. 1, pp. 431-435. London: Nature Publishing Group. Unlike human brains, connectionist networks can forget previously learned information suddenly and completely (i.e., “catastrophically”) when learning new information. The article discusses catastrophic forgetting vs. normal forgetting, measures of catastrophic interference, and solutions to the problem, including rehearsal, pseudorehearsal, and other techniques for alleviating catastrophic forgetting in neural networks.

French, R. M. (2002). Natura non facit saltum: The need for the full continuum of mental representations. Behavioral and Brain Sciences, 25(3), 339-340. Our major disagreement with the SOC model proposed by Perruchet and Vinter is that it requires conscious representations to emerge in a sudden, quantal leap from the unconscious to the conscious. We suggest that the SOC model might do well to turn to basic neural network principles that would allow it, without difficulty, to encompass unconscious representations. These “unconscious” representations, some of which may evolve into representations that would be conscious when activated, can affect conscious processing, but they do so via the same basic associative, excitatory, and inhibitory mechanisms that we observe in conscious representations. The inclusion of this type of representation in no way requires the authors to also posit sophisticated unconscious computational mechanisms.

Jacquet, M., & French, R. M. (2002). The BIA++: Extending the BIA+ to a dynamical distributed connectionist framework. Bilingualism, 5(3), 202-205. Dijkstra and van Heuven have made an admirable attempt to develop a new model of bilingual memory, the BIA+. The BIA+ is, as the name implies, an extension of the Bilingual Interactive Activation (BIA) model (Dijkstra & van Heuven, 1998; Van Heuven, Dijkstra & Grainger, 1998; etc.), which was itself an adaptation to bilingual memory of McClelland & Rumelhart’s (1981) Interactive Activation model of monolingual memory. The authors provide a wealth of background on cross-lingual interference and priming effects in bilingual memory, in what amounts to a veritable review of the literature in this area. The model that they propose is designed to account for many of these empirically observed effects. In this article we focus our discussion on three points related to the design of their model. These issues are:

We hope that the ground-breaking work of these authors will naturally evolve towards broader-based distributed connectionist network models and related dynamical models of bilingual memory that are capable of learning and of incorporating both the bottom-up and the top-down processing that we know to be an integral part of bilingual language processing.

Labiouse, C., French, R. M., & Mermillod, M. (2002). Using Autoencoders to Model Asymmetric Category Learning in Early Infancy: Insights from Principal Components Analysis. In J. A. Bullinaria & W. Lowe (Eds.), Connectionist Models of Cognition and Perception: Proceedings of the Seventh Neural Computation and Psychology Workshop. Singapore: World Scientific, 51-63. Young infants exhibit intriguing asymmetries in the exclusivity of categories formed on the basis of visually presented stimuli. For instance, infants who have previously seen a series of cats show a surge of interest when looking at dogs, which is interpreted as dogs being perceived as novel. On the other hand, infants previously exposed to dogs do not exhibit such an increased interest in cats. Recently, researchers have used simple autoencoders to account for these effects. Their hypothesis was that the asymmetry effect is caused by the smaller variances of cats’ features and by the inclusion of the values of the cats’ features within the range of dogs’ values. They predicted, and obtained, a reversal of the asymmetry by reversing the dog and cat variances, thereby reversing the inclusion relationship (i.e., dogs are now included in the category of cats). This reversal reinforces their hypothesis. We will examine the explanatory power of this model by investigating in greater detail the ways by which autoencoders exhibit . . .

Ans, B., Rousset, S., French, R. M., & Musca, S. (2002). Preventing Catastrophic Interference in Multiple-Sequence Learning Using Coupled Reverberating Elman Networks. Proceedings of the 24th Annual Conference of the Cognitive Science Society. NJ: LEA. Everyone agrees that real cognition requires much more than static pattern recognition. In particular, it requires the ability to learn sequences of patterns (or actions). But learning sequences really means being able to learn multiple sequences, one after the other, without the most recently learned ones erasing the previously learned ones. If catastrophic interference is a problem for the sequential learning of individual patterns, the problem is amplified many times over when multiple sequences of patterns have to be learned consecutively, because each new sequence consists of many linked patterns. In this paper we present a connectionist architecture that would seem to solve the problem of multiple-sequence learning using pseudopatterns.

French, R. M., & Labiouse, C. (2002). Four Problems with Extracting Human Semantics from Large Text Corpora. Proceedings of the 24th Annual Conference of the Cognitive Science Society. NJ: LEA. We present four problems that text co-occurrence programs will have to overcome in order to capture human-like semantics. These problems are: the intrinsic deformability of semantic space; the inability to detect co-occurrences of (esp. distal) abstract structures; their lack of essential world knowledge, which humans acquire through learning or direct experience with the world; and their assumption of the atomic nature of words. By looking at a number of very simple questions, based in part on how humans do analogy-making, we show just how far one of the best of these programs is from being able to capture real semantics.

French, R. M., Mermillod, M., Quinn, P., Chauvin, A., & Mareschal, D. (2002).
The Importance of Starting Blurry: Simulating Improved Basic-Level Category Learning in Infants Due to Weak Visual Acuity. Proceedings of the 24th Annual Conference of the Cognitive Science Society. NJ: LEA. At the earliest ages of development, perceptual maturation is generally considered a functional constraint on recognizing or categorizing stimuli in the environment. However, using a computer simulation of retinal development, with Gabor wavelets simulating the output of V1 complex cells (Jones & Palmer, 1987), we showed that reducing the range of spatial frequencies from the retinal map to V1 decreases the variance distribution within a category. The consequence of this is to decrease the difference between two exemplars of the same category, but to increase the difference between exemplars from two different categories. These results show that reduced perceptual acuity produces an advantage for differentiating basic-level categories. Finally, we show that the present simulations, which use Gabor-filtered input instead of feature-based input coding, provide a pattern of statistical data convergent with previously published results in infant categorization (e.g., Mareschal & French, 1997; Mareschal et al., 2000; French et al., 2001).

Mareschal, D., Quinn, P., & French, R. M. (2002). Asymmetric interference in 3- to 4-month-olds’ sequential category learning. Cognitive Science, 26, 377-389. Three- to 4-month-old infants show asymmetric exclusivity in the acquisition of Cat and Dog perceptual categories. We describe a connectionist autoencoder model of perceptual categorization that shows the same asymmetries as infants. The model predicts the presence of asymmetric catastrophic interference (retroactive interference) when infants acquire Cat and . . .

French, R. M. (2002). The Computational Modeling of Analogy-Making. Trends in Cognitive Sciences, 6(5), 200-205. Our ability to see a particular object or situation in one context as being “the same as” another object or situation in another context is the essence of analogy-making. It encompasses our ability to explain new concepts in terms of already-familiar ones, to emphasize particular aspects of situations, to generalize, to characterize situations, to explain or describe new phenomena, to serve as a basis for how to act in unfamiliar surroundings, to understand many types of humor, etc. Within this framework, the importance of analogy-making in modeling cognition becomes clear. This article is a survey of (essentially) all of the computational models of analogy-making developed over the last four decades.

French, R. M., & Chater, N. (2002). Using Noise to Compute Error Surfaces in Connectionist Networks: A Novel Means of Reducing Catastrophic Forgetting. Neural Computation, 14(7), 1755-1769. In error-driven distributed feedforward networks, new information typically interferes, sometimes severely, with previously learned information. We show how noise can be used to approximate the error surface of previously learned information. By combining this approximated error surface with the error surface associated with the new information to be learned, the network’s retention of previously learned items can be improved and catastrophic interference significantly reduced. Further, we show that the noise-generated error surface is produced using only first-derivative information and without recourse to any explicit error information.

French, R. M., & Labiouse, C. (2001). Why co-occurrence information alone is not sufficient to answer subcognitive questions. Journal of Experimental and Theoretical Artificial Intelligence, 13(4), 419-429.
Turney (2001) claims that a simple program, PMI-IR, which searches the World Wide Web for co-occurrences [...] Web pages, can be used to find human-like answers to the type of “subcognitive” questions that French (1990) claimed would invariably unmask computers (that had not lived life as we humans had) in a Turing Test. In this paper, we show that there are serious problems with Turney’s claim. We show by example that PMI-IR does not work for even simple subcognitive questions. We attribute PMI-IR’s failure to its inability to understand the relational and contextual attributes of the words/concepts in the queries. Finally, we show that, even if PMI-IR were able to answer many subcognitive questions, a clever Interrogator in the Turing Test would still be able to unmask the computer.

Labiouse, C., & French, R. M. (2001). A Connectionist Model of Person Perception and Stereotype Formation. In Connectionist Models of Learning, Development and Evolution (R. French & J. Sougné, eds.). London: Springer, 209-218. Connectionist modeling has begun to have an impact on research in social cognition. PDP models have been used to model a broad range of social psychological topics, such as person perception, illusory correlations, cognitive dissonance, social categorization, and stereotypes. Smith and DeCoster [28] recently proposed a recurrent connectionist model of person perception and stereotyping that accounts for a number of phenomena usually seen as contradictory or difficult to integrate into a single coherent conceptual framework. While their model is based on clearly defined and potentially far-reaching theoretical principles, it nonetheless suffers from certain shortcomings, among them the use of misleading dependent measures and the inability of the network to develop its own internal representations. We propose an alternative connectionist model (an autoencoder) to overcome these limitations.
In particular, the development of stereotypes within the context of this model will be discussed.

French, R. M., Ans, B., & Rousset, S. (2001). Pseudopatterns and dual-network memory models: Advantages and shortcomings. In Connectionist Models of Learning, Development and Evolution (R. French & J. Sougné, eds.). London: Springer, 13-22. The dual-network memory model is designed to be a neurobiologically plausible means of avoiding catastrophic interference. We discuss a number of advantages of this model and potential clues that the model has provided in the areas of memory consolidation, category-specific deficits, and anterograde and retrograde amnesia. We discuss a surprising result about how this class of models handles episodic ("snap-shot") memory (namely, that they seem to be able to handle both episodic and abstract memory), and we discuss two other promising areas of research involving these models.

French, R. M., & Thomas, E. (2001). The Dynamical Hypothesis in Cognitive Science: A review essay of Mind As Motion. Minds and Machines, 11(1), 101-111. Sometimes it is hard to know precisely what to think about the Dynamical Hypothesis, the new kid on the block in cognitive science, described succinctly by the slogan "cognitive agents are dynamical systems." Is the DH a radically new approach to understanding human cognition? (No.) Is it providing deep insights that traditional symbolic artificial intelligence overlooked? (Certainly.) Is it providing deep insights that recurrent connectionist models, circa 1990, had overlooked? (Probably not.) Is time, as instantiated in the DH, necessary to our understanding of cognition? (Certainly.) Is time, as instantiated in the DH, sufficient for understanding cognition? (Certainly not.) . . . The DH is often touted as a revolutionary alternative to the traditional Physical Symbol System Hypothesis (PSSH, renamed in Mind As Motion the Computational Hypothesis) (Newell & Simon, 1976), which was the bedrock of artificial intelligence for 25 years. We disagree.